Deepfake Technology: Ethical Implications in the Digital Age

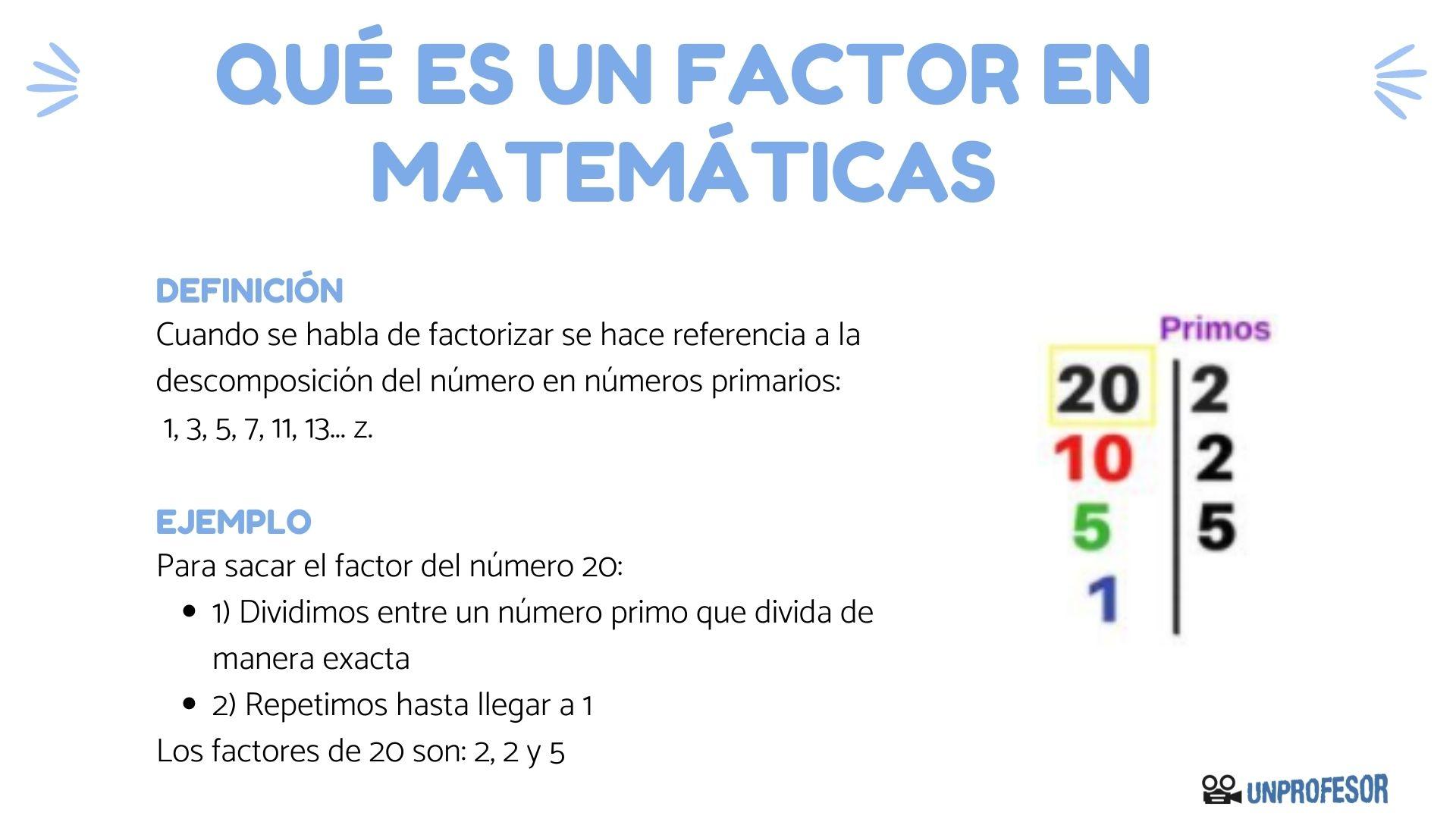

Understanding deepfake technology

Deepfake technology represents one of the most significant developments in artificial intelligence and machine learning. At its core, it uses deep learning algorithms to swap faces, manipulate voices, or create wholly synthetic media that appears authentic. These AI systems analyze existing images and videos to generate new content that mimics reality with startling accuracy.

The term "deepfake" combines "deep learning" and "fake", highlighting both the technical foundation and the potentially deceptive nature of the content produced. The technology works by training neural networks on large datasets of images or audio samples of a target person, then generating new content that applies these learned patterns to different scenarios.
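The face-swap variant of this idea is commonly described as one encoder shared by two identities plus a separate decoder for each identity: every face is compressed into a common latent code, and a "swap" encodes person A's face but decodes it with person B's decoder. The toy sketch below shows only that data flow. It is an assumption-laden illustration, not a real deepfake system: the layers are linear rather than deep convolutional networks, random vectors stand in for face images, and all names and sizes (`DIM`, `LATENT`, `train_step`, `swap`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, LATENT, N = 64, 8, 200  # hypothetical sizes: 64-value "images", 8-d code

# Stand-in training data: two "identities", each scattered around its own mean.
faces_a = rng.normal(0, 1, DIM) + 0.1 * rng.normal(0, 1, (N, DIM))
faces_b = rng.normal(0, 1, DIM) + 0.1 * rng.normal(0, 1, (N, DIM))

# One encoder shared by both identities, one decoder per identity (linear here).
params = {
    "enc": rng.normal(0, 0.1, (DIM, LATENT)),
    "dec_a": rng.normal(0, 0.1, (LATENT, DIM)),
    "dec_b": rng.normal(0, 0.1, (LATENT, DIM)),
}

def recon_loss(x, dec):
    """Mean squared error of the encode -> decode reconstruction."""
    return float(np.mean((x @ params["enc"] @ params[dec] - x) ** 2))

def train_step(x, dec, lr=0.02):
    """One gradient-descent step on recon_loss, updating the encoder and one decoder."""
    enc, d = params["enc"], params[dec]
    z = x @ enc                              # latent codes, shape (N, LATENT)
    grad_out = 2 * (z @ d - x) / x.size      # d(loss)/d(reconstruction)
    grad_d = z.T @ grad_out                  # gradient w.r.t. the decoder
    grad_enc = x.T @ (grad_out @ d.T)        # gradient w.r.t. the shared encoder
    params[dec] = d - lr * grad_d
    params["enc"] = enc - lr * grad_enc

def swap(x_a):
    """Encode identity A's inputs, then decode them with identity B's decoder."""
    return x_a @ params["enc"] @ params["dec_b"]

before = recon_loss(faces_a, "dec_a") + recon_loss(faces_b, "dec_b")
for _ in range(500):
    train_step(faces_a, "dec_a")
    train_step(faces_b, "dec_b")
after = recon_loss(faces_a, "dec_a") + recon_loss(faces_b, "dec_b")
print(f"reconstruction loss: {before:.3f} -> {after:.3f}")
print(swap(faces_a[:3]).shape)  # (3, 64)
```

Sharing the encoder is the key design choice in this scheme: because both decoders read the same latent space, a code produced from one person's face can be rendered by the other person's decoder.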

Modern deepfake systems can create videos showing people saying or doing things they never actually said or did, with results so convincing that distinguishing them from genuine content is becoming increasingly difficult for the average viewer.

The ethical landscape of synthetic media

The emergence of deepfake technology has created a complex ethical landscape that society is still struggling to navigate. Beyond the familiar trade-off between benefits and potential harms that accompanies many innovations, deepfakes raise fundamental questions about truth, consent, and the integrity of visual evidence.

Consent and autonomy

Perhaps the most immediate ethical concern involves consent. When someone's likeness is used to create a deepfake, their image and identity are appropriated without permission. This represents a profound violation of personal autonomy: the ability to control how one's image and voice are used and presented to the world.

The non-consensual creation of deepfakes raises serious questions about digital rights. Does a person own their digital likeness? Should explicit permission be required before someone's face or voice can be used in synthetic media? These questions become particularly urgent when deepfakes are used to place individuals in compromising or embarrassing situations.

Truth and misinformation

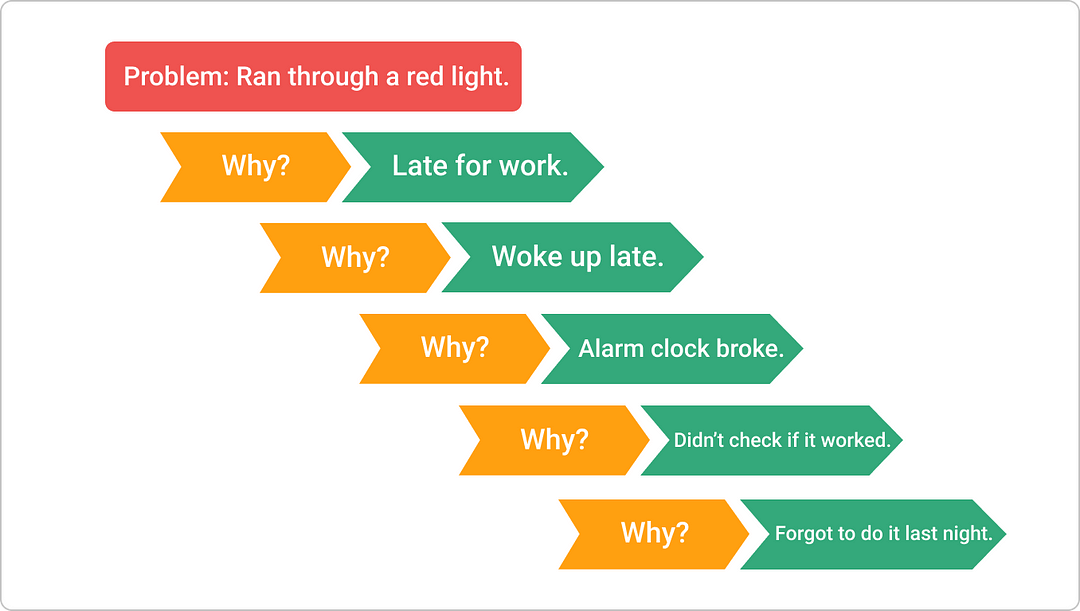

Deepfakes threaten to undermine the very concept of visual evidence. Throughout history, seeing has been believing: photographs and videos have served as powerful evidence of events. The proliferation of convincing synthetic media challenges this fundamental trust.

When deepfakes are used to spread misinformation or propaganda, they can distort public discourse and manipulate public opinion. A synthetic video showing a political figure making inflammatory statements could trigger international incidents or influence election outcomes before the truth emerges.

This technology creates what scholars call the "liar's dividend": a phenomenon in which genuine footage can be dismissed as fake, allowing actual misconduct to be denied. When any video can potentially be dismissed as synthetic, accountability becomes more difficult to enforce.

Privacy implications

Deepfake technology raises profound privacy concerns. Creating realistic synthetic media often requires large datasets of images or recordings of the target individual, data that may be harvested without their knowledge or consent.

Moreover, the ability to create realistic simulations of individuals opens new avenues for privacy violations. Deepfakes can depict what someone might look like in situations they would never consent to, creating a form of privacy invasion that was previously impossible.

Deepfakes in different contexts

Political manipulation

The political arena represents one of the most concerning applications of deepfake technology. Synthetic videos of political figures making controversial statements or engaging in scandalous behavior could sway public opinion, influence elections, or incite social unrest.

The timing of such deepfakes can be especially damaging: if one is released immediately before an election, there may not be enough time to debunk the content before votes are cast. Even after being debunked, the emotional impact of seeing a convincing video can linger in voters' minds.

Political deepfakes also threaten diplomatic relations. A synthetic video appearing to show a world leader making threats against another nation could escalate tensions before verification occurs. The potential for deepfakes to trigger international incidents has raised concerns among security experts worldwide.

Non-consensual intimate content

One of the earliest and most disturbing applications of deepfake technology has been the creation of non-consensual intimate content. This is a serious form of image-based abuse that can cause profound psychological harm to victims.

Such content can damage reputations, lead to harassment, and cause significant emotional distress. The fact that the depicted acts never occurred does little to mitigate the harm when viewers believe the content is authentic, or when the victim experiences the violation of having their likeness used in this manner.

The psychological impact on victims can be severe, including anxiety, depression, and social withdrawal. Many victims report feeling violated in a manner similar to physical assault, highlighting how digital representation is increasingly intertwined with personal identity and dignity.

Entertainment and creative expression

Not all applications of deepfake technology raise ethical concerns. In entertainment and creative contexts, synthetic media offers new artistic possibilities. From bringing historical figures to life in educational content to creating new forms of digital art, the technology has legitimate creative applications.

Even in entertainment contexts, however, questions of consent remain important. Using a deceased actor's likeness in a new film, for instance, raises questions about posthumous rights and the appropriate boundaries of digital resurrection.

Legal and regulatory responses

The rapid development of deepfake technology has outpaced legal frameworks, creating regulatory gaps that lawmakers are now attempting to address. Different jurisdictions have taken varied approaches to managing the ethical challenges posed by synthetic media.

Existing legal frameworks

Several existing legal mechanisms can potentially address some harms caused by deepfakes. Defamation laws may apply when false representations damage a person's reputation. Copyright law could address unauthorized use of images. Privacy laws in some jurisdictions protect against certain forms of image manipulation.

However, these existing frameworks were not designed with deepfake technology in mind and often prove inadequate. The global nature of digital content also creates jurisdictional challenges, as content created in one country can easily spread to regions with different legal standards.

Emerging legislation

Several states and countries have begun implementing specific legislation targeting deepfakes. These laws typically focus on particular harmful applications rather than the technology itself. For instance, some jurisdictions have criminalized non-consensual intimate deepfakes or synthetic media intended to interfere with elections.

These targeted approaches reflect the challenge of regulating a technology with both harmful and beneficial applications. Comprehensive bans would stifle legitimate creative and educational uses, while narrow regulations may leave gaps that malicious actors can exploit.

Platform policies

Major technology platforms have developed policies on synthetic media, though their approaches vary significantly. Some platforms have implemented outright bans on certain categories of deepfakes, while others focus on labeling or contextualizing such content.

The effectiveness of these policies depends heavily on detection capabilities. Identifying deepfakes at scale remains technically challenging, particularly as the technology continues to advance. This creates an ongoing technological arms race between deepfake creators and detection systems.

Technical solutions and detection methods

As deepfake technology evolves, so do methods for detecting synthetic media. Technical solutions are an important component of addressing the ethical challenges posed by deepfakes.

Digital watermarking and authentication

Digital watermarking technologies can help establish the provenance of genuine content. By embedding invisible markers in authentic media at the point of creation, these systems create a verifiable chain of custody that can help distinguish original content from manipulations.
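To make "invisible markers" concrete, here is a minimal sketch of least-significant-bit (LSB) embedding, one of the simplest fragile watermarking schemes. It is an illustrative assumption, not the method any particular provenance system uses: pixels are modeled as a flat sequence of 8-bit values, the device identifier `CAM-0001` is hypothetical, and LSB marks would not survive recompression or resizing, which is why practical systems favor more robust schemes.

```python
def embed_watermark(pixels: bytes, mark: bytes) -> bytearray:
    """Hide `mark` in the least significant bit of successive pixel values."""
    bits = [(byte >> i) & 1 for byte in mark for i in range(7, -1, -1)]  # MSB first
    if len(bits) > len(pixels):
        raise ValueError("image too small for watermark")
    out = bytearray(pixels)
    for idx, bit in enumerate(bits):
        out[idx] = (out[idx] & 0xFE) | bit  # clear the low bit, then set it to the mark bit
    return out

def extract_watermark(pixels: bytes, length: int) -> bytes:
    """Read `length` bytes back out of the least significant bits."""
    result = bytearray()
    for start in range(0, length * 8, 8):
        value = 0
        for px in pixels[start:start + 8]:
            value = (value << 1) | (px & 1)
        result.append(value)
    return bytes(result)

image = bytes(range(256)) * 4          # stand-in for 1,024 grayscale pixel values
device_mark = b"CAM-0001"              # hypothetical device identifier
marked = embed_watermark(image, device_mark)
print(extract_watermark(marked, len(device_mark)))      # b'CAM-0001'
print(max(abs(a - b) for a, b in zip(image, marked)))   # 1: no pixel moves by more than 1

tampered = bytearray(marked)
tampered[0] ^= 1                       # flip one low bit, as an edit might
print(extract_watermark(tampered, len(device_mark)) == device_mark)  # False
```

Changing any pixel value by at most one keeps the mark invisible, while the fragility is deliberate here: an edit to a marked region corrupts the mark and so signals manipulation.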

Several initiatives are working to develop authentication standards that would allow cameras and recording devices to cryptographically sign content at the moment of creation. These signatures would remain attached to the media as it moves through the digital ecosystem, providing a mechanism to verify authenticity.
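The sign-at-capture, verify-later idea can be sketched with Python's standard library. This sketch swaps in HMAC-SHA256, a symmetric message authentication code, because it needs no third-party packages; that is a simplification, since with HMAC anyone who can verify can also forge. Camera-signing schemes of the kind described would more likely use public-key signatures, so that anyone can verify with a published key. The key and function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical per-device secret; a real device would keep key material in secure hardware.
DEVICE_KEY = b"hypothetical-per-device-secret"

def sign_capture(media: bytes, key: bytes = DEVICE_KEY) -> str:
    """Produce a tag bound to the exact bytes recorded at capture time."""
    return hmac.new(key, media, hashlib.sha256).hexdigest()

def verify_capture(media: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_capture(media, key), tag)

original = b"\x00\x01\x02 raw video frame bytes"  # stand-in for recorded media
tag = sign_capture(original)                      # travels alongside the media
print(verify_capture(original, tag))              # True
print(verify_capture(original + b"!", tag))       # False: any change breaks the tag
```

Because the tag covers every byte, even a one-byte edit invalidates it. The open practical question, which code alone does not solve, is keeping such signatures attached as media is re-encoded and re-shared across platforms.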

AI-based detection

Artificial intelligence systems trained specifically to identify synthetic media represent another important technical approach. These systems analyze visual and audio artifacts that may not be apparent to human observers but reveal the artificial nature of deepfakes.
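The underlying principle, learning from labeled examples which statistical artifacts separate genuine from synthetic media, can be illustrated with a toy logistic-regression classifier. This is a heavily simplified assumption: real detectors are deep networks trained on labeled video and audio, whereas here each clip is reduced to four hypothetical "artifact scores" drawn from random numbers, with synthetic clips shifted to score higher on average.

```python
import numpy as np

rng = np.random.default_rng(1)
N, FEATURES = 400, 4  # hypothetical: 400 clips per class, 4 artifact scores per clip

# Stand-in data: label 0 = genuine, 1 = synthetic. Synthetic clips are shifted
# so their (hypothetical) artifact scores run higher on average.
X = np.vstack([rng.normal(0.0, 1.0, (N, FEATURES)),
               rng.normal(2.0, 1.0, (N, FEATURES))])
y = np.concatenate([np.zeros(N), np.ones(N)])

w = np.zeros(FEATURES)
b = 0.0

def predict_proba(X):
    """Model's probability that each clip is synthetic."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

for _ in range(2000):                  # plain gradient descent on the log loss
    p = predict_proba(X)
    w -= 0.5 * X.T @ (p - y) / len(y)
    b -= 0.5 * float(np.mean(p - y))

accuracy = float(np.mean((predict_proba(X) > 0.5) == y))
print(f"training accuracy: {accuracy:.2f}")
```

The toy also hints at the arms race described next: if a generator learned to shift its artifact scores back toward the genuine distribution, the two classes would overlap and this classifier's accuracy would fall toward chance.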

Nonetheless, detection systems face significant challenges. As deepfake technology improves, the artifacts that detection systems rely on become less pronounced. This creates a perpetual technological competition between increasingly sophisticated deepfakes and more advanced detection methods.

Education and media literacy

Technical and legal solutions alone cannot address all the ethical challenges posed by deepfakes. Building societal resilience through education and media literacy is a crucial complementary approach.

Developing critical media consumption skills has become increasingly important in a world where visual evidence can no longer be taken at face value. Educational programs that teach people to question the source of content, look for contextual clues, and verify information through multiple channels can help mitigate the harmful impacts of synthetic media.

Media literacy also involves understanding how deepfakes are created and the current limitations of the technology. Knowledge of common artifacts or inconsistencies can help viewers identify potential synthetic content, though this approach will become less effective as the technology improves.

The future of synthetic media ethics

The ethical questions surrounding deepfake technology will intensify as synthetic media becomes more sophisticated and widespread. Several emerging trends will shape the future ethical landscape.

Democratization of the technology

As deepfake tools become more accessible, the barrier to creating synthetic media continues to fall. What once required significant technical expertise and computing resources can now be accomplished with consumer-grade hardware and user-friendly applications.

This democratization has both positive and negative implications. While it enables creative expression and innovation, it also means harmful applications become more widespread. The ethical framework for deepfakes must account for this increased accessibility.

Integration with other technologies

The combination of deepfakes with other emerging technologies presents new ethical challenges. When synthetic media is integrated with virtual reality, augmented reality, or advanced voice synthesis, the potential for immersive deception increases dramatically.

These combinations could create entirely new categories of ethical concerns that current frameworks are ill-equipped to address. Anticipating these developments requires ongoing ethical assessment as technologies converge.

Developing ethical standards

The development of professional and industry standards is an important step toward ethical use of synthetic media. Much like journalistic or medical ethics, shared principles for the creation and distribution of deepfakes could help guide responsible innovation.

These standards might include requirements for disclosure when content has been synthetically generated, guidelines for obtaining consent from the individuals depicted, and frameworks for assessing potential harms before content is released.

Balancing innovation and protection

Perhaps the most significant ethical challenge surrounding deepfake technology is balancing technological innovation with appropriate safeguards. Overly restrictive approaches could stifle beneficial applications and creative expression, while insufficient protection leaves individuals and society vulnerable to serious harms.

This balance requires nuanced approaches that distinguish between different applications of the technology. Context matters greatly: the same technical capability used in an artistic film raises entirely different ethical questions when applied to political disinformation or non-consensual intimate content.

Finding this balance demands ongoing dialogue between technologists, ethicists, legal experts, and the communities most affected by potential harms. As the technology continues to evolve, so too must our ethical frameworks for managing its impacts.

Conclusion

Deepfake technology represents a profound ethical challenge for the digital age. By undermining the reliability of visual and audio evidence, it forces us to reconsider fundamental questions about truth, consent, and the nature of digital identity.

Addressing these challenges requires a multifaceted approach combining legal frameworks, technical solutions, platform policies, and educational initiatives. No single intervention can fully address the complex ethical landscape created by synthetic media.

As this technology continues to advance, society faces the ongoing task of developing ethical frameworks that can adapt to new capabilities and applications. The questions raised by deepfakes extend beyond the technology itself to touch on fundamental values of truth, autonomy, and responsible innovation in the digital age.